Final projects from 2016

A Children’s CrowdStory by Shorya Mantry, Jon Liu, Dak Song

Video profile: http://player.vimeo.com/video/165384258

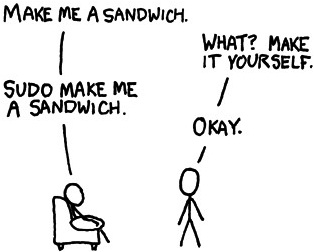

Give a one sentence description of your project. The goal of “A Children’s CrowdStory” is to write a full children’s book using crowd workers.

What type of project is it? A business idea that uses crowdsourcing

What similar projects exist? Scott Klososky used crowdsourcing to create a book about the power of crowdsourcing. The book is titled “Enterprise Social Technology: Helping Organizations Harness the Power of Social Media, Social Networking, Social Relevance.” It is co-authored by experts in the field, and every other aspect of the book, such as the cover, was crowdsourced.

https://www.dropbox.com/s/0gxtsr8105qvcyw/Rate.png?dl=0

In the quality control module, all workers are paid the same amount, 5 cents, for rating the sentences. We wanted to keep the pay the same across all workers, as the only differentiating factor for the quality model should be the ratings, not the incentives to do the ratings.

When comparing the workers who were paid 2 cents to the workers who were paid 5 cents, there was a huge jump in average interest rating: the difference was 0.85. However, between workers who were paid 5 cents and workers who were paid 10 cents, the difference in rating was only 0.05. We interpreted this as diminishing marginal returns, meaning that beyond a certain point, more pay does not increase the interest rating by much.

https://www.dropbox.com/s/ofjo1krje78h5yg/Pay_Thought-Provoking.png?dl=0

In this analysis, we discovered that although thought-provoking ratings increased as pay increased, there wasn’t as much difference between the ratings. We concluded that this stemmed from the fact that the skill level of writing stories was around the same for all the crowd workers. Although there were some workers with weak vocabulary or weak English, among the English writers, a worker would be limited by his or her skill level no matter how much he or she was paid. Therefore, since we assumed that all the workers would have around the same skill level, the fact that the ratings on thought-provoking were similar makes sense.

Therefore, we decided that in further iterations we would eliminate paragraphs whose appropriateness rating, averaged among the workers, was less than 3.5. From the paragraphs left, we would pick the paragraph with the highest average total rating to add to our ongoing story. We chose 3.5 as the cutoff, as it was clear from our aggregate analysis that paragraphs with a higher appropriateness were rated better than paragraphs with a lower appropriateness. Furthermore, our target audience is children, so we wanted to ensure that the final product would be a story that is appropriate for children to read. We didn’t compare aggregated responses against individual responses.

What challenges would scaling to a large crowd introduce? If we wanted to do something like creating a full novel, the main concern would be time. We would need a huge crowd to write each paragraph, an even bigger crowd to aggregate them, and a very large number of iterations. This would require a ridiculous amount of time, as each dataset needs to be put through code for aggregation, and then we would need to create each HIT. If this process could be automated, that time and effort would be saved.

If we were to scale up and do this for a much longer book, we could do a cost analysis. Online, I found that popular books range from 4,000 to 8,000 sentences (Sense and Sensibility: 5,179 sentences, The Adventures of Tom Sawyer: 4,882 sentences, A Tale of Two Cities: 7,743 sentences). If we wrote a 5,000 sentence book and each person wrote 2-3 sentences (most of our workers wrote that many), then we would need approximately 2,000 HITs each for creating and for rating the story. Assuming we pay the workers $0.05 both for creating the story and for quality control, it would cost 10 workers × $0.05 × 2,000 + 30 workers × $0.05 × 2,000 = $4,000 to write a book. It would also take a while to write the book. If this process were entirely automated, we would need 2,000 × 1,411 seconds + 2,000 × 1,635 seconds = 6,092,000 seconds, or 70 days, 12 hours, 13 minutes, and 20 seconds. Considering it takes some authors years to write a story, this is an extremely fast process.

How do you ensure the quality of what the crowd provides? We were extremely worried about workers typing nonsense into the text box. That would completely ruin our project, so we first did a test run with two cases: one with no character minimum for the workers’ input, and one with a minimum of 75 characters. With no minimum, only one out of the ten stories made sense (with no garbage characters). With a minimum of 75 characters, we had seven stories that weren’t garbage characters and were actually relevant to our project. As a result, we used the 75 character minimum for our entire process.
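The scale-up estimate above (2,000 HITs, $0.05 per task, 1,411 and 1,635 seconds) can be checked with a few lines of arithmetic. This is only a sketch: the constant and function names are illustrative, and it assumes the 10 workers are the writers per HIT and the 30 workers are the raters per HIT, which the text implies but does not state outright.

```python
# Figures come from the write-up; names and the writer/rater split are assumptions.
HITS = 2000              # about 5,000 sentences at 2-3 sentences per HIT
PAY_PER_TASK = 0.05      # dollars paid per worker per task
WRITERS_PER_HIT = 10     # assumed: workers writing candidate pages
RATERS_PER_HIT = 30      # assumed: workers rating candidate pages
WRITE_SECONDS = 1411     # time quoted for one creation round
RATE_SECONDS = 1635      # time quoted for one rating round


def total_cost(hits=HITS):
    """Total payout in dollars for writing and rating every HIT."""
    workers = WRITERS_PER_HIT + RATERS_PER_HIT
    return round(workers * PAY_PER_TASK * hits, 2)


def total_duration(hits=HITS):
    """Total time as (days, hours, minutes, seconds) if rounds run back to back."""
    seconds = hits * (WRITE_SECONDS + RATE_SECONDS)
    days, rem = divmod(seconds, 86400)
    hours, rem = divmod(rem, 3600)
    minutes, secs = divmod(rem, 60)
    return days, hours, minutes, secs
```

Changing `hits` shows how both cost and duration grow linearly with the length of the book under these assumptions.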
We also ensured quality by creating an aggregation model that attempts to pick out the best page from all the submissions. We explain the details below.

First, to ensure that our workers wrote the required length of text, we created a textbox in CrowdFlower that only accepted inputs of 75 to 200 characters. It then became a concern that workers would write nonsense in the text box to get their money as fast as possible. We thought about writing test questions, but there seemed to be nothing we could ask that was relevant or helpful for weeding out bad workers. We decided that our quality control would essentially be our aggregation model.

The aggregation model is quite simple. We take the story as it currently stands and ask for more submissions through CrowdFlower. These submissions are candidates for the next page of the story. We then put those submissions through a rating task. The rating task presents the story along with one new submission, so different workers in the rating task see the same story but may see a different additional page. Their job is to determine which additional page makes it into the story. They do this by rating the new page on a scale of one to five (with five being the best) in five different categories: 1) relevance to the story 2) interest 3) child-appropriateness 4) readability (English, grammar, comprehensibility) 5) thought-provoking. We have 30 workers do this task. We then average these numbers, so each new submission has five average ratings. Per a TA’s suggestion, we eliminated submissions with an average child-appropriateness rating of less than 3.5. From the remaining submissions, we took the sum of the five averages, and the submission with the highest total became the new page. This page officially becomes part of the story, and the process repeats until we are done.

In addition, we wrote code in VBA that showed us any submissions containing curse words, which we were then easily able to remove, since such language should not be allowed in a children’s book. This helped us analyze our data much faster.

We wanted to make sure that even though we were only considering stories rated 3.5 or above for appropriateness, we were not losing out on the quality of the overall story. As a result, we ran an analysis of overall quality (the average of all categories except appropriateness) versus appropriateness. We also ran a similar analysis on the stories from a curse words perspective, to see whether the overall quality was lower with curse words or without. We saw that the appropriateness filter did not compromise the overall quality of the stories. The equation we got was y = 0.8013x + 0.7758, with an R^2 of 0.78807, where y is overall quality and x is appropriateness. This shows that, on average, when average appropriateness increases by 1, overall quality increases by 0.8013. We also saw that the average overall quality of responses with curse words was 2.41, and without was 3.63. The p value was 3.03E-11, so the difference is statistically significant. Our VBA code that identifies the stories with curse words therefore proved useful, since stories without curse words have higher quality.

https://www.dropbox.com/s/pr7hx4oo7p1i2ox/FP.png?dl=0

If it could be automated, say how. If it is difficult or impossible to automate, say why. We needed actual workers to write the contents of our children’s book. Technically, the process could be automated by generating random words and putting them together. However, we needed contributors to be creative and continue the flow of a story that was provided. Additionally, we wanted to see how people build off of someone else’s work to follow the story or create their own path.
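The aggregation model described in this section (average each of the five categories over the raters, drop submissions whose average child-appropriateness is below 3.5, then pick the highest sum of averages) could be sketched as follows. This is not the team's own code, which was written in VBA; the category keys, function names, and input layout are hypothetical.

```python
from statistics import mean

# Hypothetical keys for the five rating categories named in the text.
CATEGORIES = ("relevance", "interest", "appropriateness",
              "readability", "thought_provoking")
APPROPRIATENESS_CUTOFF = 3.5  # cutoff stated in the write-up


def average_ratings(ratings):
    """Average each category over all workers' ratings of one submission."""
    return {c: mean(r[c] for r in ratings) for c in CATEGORIES}


def pick_next_page(submissions):
    """Pick the next page of the story.

    submissions maps each submitted text to a list of per-worker rating
    dicts. Returns the text with the highest sum of category averages among
    submissions that pass the cutoff, or None if none passes.
    """
    best_text, best_total = None, float("-inf")
    for text, ratings in submissions.items():
        averages = average_ratings(ratings)
        if averages["appropriateness"] < APPROPRIATENESS_CUTOFF:
            continue  # filtered out as not child-appropriate enough
        total = sum(averages.values())
        if total > best_total:
            best_text, best_total = text, total
    return best_text
```

Returning `None` when every submission fails the cutoff makes the "best of the worst" case explicit, so a caller could rerun the writing HIT instead of accepting a weak page.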

What are some limitations of your project? In our case, each iteration produced a quality sentence, and although the flow of the story wasn’t top-notch, it was enjoyable. However, one limitation of our current project model is that if all the paragraphs in one iteration were bad, we would have to include the best of the worst. One way to get around this limitation would be to increase the number of workers producing paragraphs in each iteration. Another limitation is that although we ensured that each response fell within a certain range of characters, we didn’t check whether each response avoided certain words or reused certain words from the previous paragraphs.

The largest technical challenge was in debugging. I had to step into my code multiple times to see what was wrong, especially since this was the first time I used VBA.


Best of Penn by Bianca Pham, Stefania Maiman, Tadas Antanavicius, Nathaniel Chan, Stephanie Hsu

Vimeo Password: bestofpenn

Video profile: http://player.vimeo.com/video/165397146

Give a one sentence description of your project. Crowdsourcing Penn student knowledge to determine the best locales for various activities in the Philadelphia area.

What type of project is it? A business idea that uses crowdsourcing

What similar projects exist? Yelp and Foursquare. Both are location based, but neither is community based like Best of Penn.

How does your project work? UPenn students sign up on Best of Penn and can begin to view lists, create new ones, or add entities to these lists. Once they have contributed enough ratings to boost their credit score, they can unlock other lists. These tasks are all done by the crowd. What we do automatically behind the scenes is calculate each user’s worker quality (which is based on whether the user’s posted content has been flagged) and each entity’s average rating (which is based on users’ worker qualities and the ratings that users give it).

Who are the members of your crowd? UPenn students

How many unique participants did you have? 95

For your final project, did you simulate the crowd or run a real experiment? Real crowd

If the crowd was real, how did you recruit participants? Since our crowd is only made up of UPenn students, all of the data collected from our classmates was useful, relevant, and quality data. On top of what we collected from NETS 213 students, we also sent our site around to our friends and peers via listservs and social media to get the word out to Penn students to contribute.

Would your project benefit if you could get contributions from thousands of people? true

Do your crowd workers need specialized skills? false

What sort of skills do they need? They do not need any particular skill. A worker’s credit score does, however, rely on the individual’s ability to rate certain places, restaurants, etc.

Do the skills of individual workers vary widely? true

If skills vary widely, what factors cause one person to be better than another?
Workers have different experiences and have gone to different places on and off campus.

Did you analyze the skills of the crowd? true

If you analyzed skills, what analysis did you perform? In the previous milestone, we analyzed the number of entities rated vs the number of topics and the number of entities created. This is how we determined a worker’s skill. After getting significant contributions to our project, we were interested in examining the kind of data our workers were contributing. Did most users just submit ratings or just submit new entries? Or did our users typically divide their contributions between ratings and new entries or lists? We were also interested in seeing if we had users that just signed up to browse through the ratings of the different venues. Since this stage of our project was for data collection from our classmates, we assume that most users were making contributions. However, we expect to see a lot more users sign up and make minimal or zero contributions in the future, as our site becomes more robust and full of good recommendations and ratings of the best things to do/see/eat at Penn.

To analyze the relationship between the different kinds of contributions, we looked at each user and the breakdown of their contributions. Next, we created 3 scatter plots where each data point corresponds to a user and the x and y axes correspond to 2 of the 3 kinds of contributions: number of ratings submitted, number of entities created, and number of lists created. There is a positive correlation between number of ratings and number of entities created. For the most part, we found that our users typically contributed a lot more ratings than entities, as expected. The average number of ratings per user was 42.96, while the average number of entities created was 7.98. There wasn’t a strong correlation between number of ratings and number of topics/lists created.
This is because not many users contributed lists or topics: there were only 31 lists in total when we performed our analysis. The plot shows that a significant number of users contributed ratings but 0 lists. This is also highlighted by the fact that the average number of lists created was 0.65, as opposed to the average of 42.96 ratings per person. There also wasn’t a strong correlation between the number of entities created and the number of topics created. Again, we attribute this to the fact that most users rarely or never created a new topic.

https://github.com/tadas412/bestofpenn/blob/master/data/analysis/topicsVsRatings.png
https://github.com/tadas412/bestofpenn/blob/master/data/analysis/topicsVsentities.png
https://github.com/tadas412/bestofpenn/blob/master/visualizations/LoginPage.png
https://github.com/tadas412/bestofpenn/blob/master/visualizations/Homepage.png
https://github.com/tadas412/bestofpenn/blob/master/visualizations/HomepageLocks.png
https://github.com/tadas412/bestofpenn/blob/master/visualizations/TopicPage.png
https://github.com/tadas412/bestofpenn/blob/master/visualizations/AddEntityPage.png

The credit system itself is relatively simple. When a new user signs up, they begin with 0 credits. Each time a user contributes a rating, their credit score increases by 0.5, so if a user contributes 4 ratings, their credit goes up by 2. Every user can initially view the top 5 lists, even with no credits. We also allow each new user to view new lists that have a limited number of ratings, so that these less popular lists can still be accessed and obtain more ratings. As a user’s contributions increase, they unlock more and more lists. The number of lists a user can view is given by the following formula: [unlockedLists = 5 + creditScore]. For example, a user that signs up and contributes 8 ratings can see the original 5 + 8(0.5) = 9 total lists.
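The credit formula above is small enough to write out directly. The sketch below uses hypothetical names, and it assumes that a fractional credit score (for example 1.5 after 3 ratings) is rounded down when counting unlocked lists, which the write-up does not specify.

```python
BASE_LISTS = 5           # lists every user can see with no credits
CREDIT_PER_RATING = 0.5  # credit earned for each rating contributed


def credit_score(num_ratings):
    """Credits earned from ratings alone, as described in the text."""
    return num_ratings * CREDIT_PER_RATING


def unlocked_lists(num_ratings):
    """unlockedLists = 5 + creditScore, rounding partial credits down (assumption)."""
    return BASE_LISTS + int(credit_score(num_ratings))
```

For instance, `unlocked_lists(8)` reproduces the worked example of 9 lists for a user with 8 ratings.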
We believe that the information we are providing to Penn students is valuable to them, and that once we get initial contributions from a user, Best of Penn will pique their interest and they will continue to rate and contribute. Once a user opens a topic to view the different entities, it becomes entertaining to contribute their own opinions by quickly clicking a button. From feedback we have gotten from fellow Penn students, we are confident that the simplicity of contributing with the credit system, combined with the fact that students are genuinely intrigued by the information we are providing, will ensure the success of our site. We predict that with more time and tuning, we will be able to build a better incentive system that gets more users to contribute. But again, we believe the information provided is useful to our users, and a lot of the incentive comes from that. Also, since the site provides such information, we expect that a high proportion of our users will just sign up and browse the different topics without ever contributing anything, much like the majority of Yelp and TripAdvisor users.

How do you aggregate the results from the crowd? We aggregated results from the crowd by gathering submissions of new lists and new entity creations. We also aggregated users’ ratings, as mentioned before under quality control, to update the rankings of the lists. Every user’s contribution to the website is gathered within the platform, and information is redistributed after certain calculations are made for specific pieces of data.

Did you analyze the aggregated results? false

What analysis did you perform on the aggregated results? NA

Did you create a user interface for the end users to see the aggregated results? true

If yes, please give the URL to a screenshot of the user interface for the end user. https://github.com/tadas412/bestofpenn/blob/master/visualizations/TopicPage.png

Describe what your end user sees in this interface.
The aggregated results are shown to the user as ratings for each venue. When a user opens a topic page, they see the entities ordered by their aggregated ratings.

What challenges would scaling to a large crowd introduce? The main challenges of scaling would most likely be handling inappropriate content and the amount of data that the website would need to take on. If we added more communities, that would add thousands more users. Our quality control would then need to be even more efficient, since we would need to keep track of thousands of users and the data they provide. Like Yelp and Foursquare, we would mainly need to find ways to ensure that the information provided is relevant and that our website can handle all of this data.

Did you perform an analysis about how to scale up your project? false

What analysis did you perform on the scaling up? NA

How do you ensure the quality of what the crowd provides? We implemented a flagging system that allows contributors to flag topics and entities that they find inappropriate and/or not relevant. If there are more than 10 flags for any item, it will no longer show on the page. This helps ensure that users are making quality contributions.

Did you analyze the quality of what you got back? false

What analysis did you perform on quality? NA

If it could be automated, say how. If it is difficult or impossible to automate, say why. Our website relies on the opinion of Penn students. Since we wanted to create a community based site that is not influenced by outside opinions, we wanted to limit its use to Penn students only. Since all of our data is taken strictly from the thoughts of the students, it’s impossible to train a machine (in this case) to imitate the views and opinions of the student body. We didn’t want our project to take the views and opinions already out there on the web, but rather the skewed Penn opinions.

Did you train a machine learning component? false

What are some limitations of your project? Our main limitation would probably be incentivizing a large crowd to contribute. While our project did succeed at this scale, its continued success depends on the contributions and ratings from students for years to come. If Penn students only use our site to look up places to go to, it can easily become outdated and irrelevant (as a lot of Penn projects become after a few years). The best way to keep it successful is to ensure future students continue to contribute and rate, so that the data remains up to date and useful for the students currently attending the university.

Did your project have a substantial technical component? Yes, we had to code like crazy.

Describe the largest technical challenge that you faced: For our user interface, we had to create a scalable, user-friendly website to display our data and to collect user ratings and contributions. It required substantial software engineering because, besides the front end, we also implemented a lot of back end components. Our entire team worked together and combined our unique skills, but most of us had to learn a new programming language/technology to be able to contribute. Overall, though, integrating the back end with the front end was the largest technical challenge we faced.


BookSmart by Jose Ovalle, Nathaniel Selzer, Eric Dardet, Holden McGinnis

Video profile: http://player.vimeo.com/video/165390452

Give a one sentence description of your project. Translating children’s books with the crowd.

What type of project is it? Our experiment is a mixture of a social science experiment with the crowd and a business idea that uses crowdsourcing.

What similar projects exist? A lot of money has been flowing into translation crowdsourcing companies recently, with players such as Duolingo, Gengo, Qordoba, VerbalizeIt, and more looking to surpass what is possible with machine translation alone.

How does your project work?

Step 1: Scrape books from the children’s library. Jose developed a Python scraper specifically for the children’s library website that downloads the images and saves them into a file. It works in batches, and you just need to edit which language you want by changing the id field in the script.

Step 2: Use these book images to create HITs, with each page to be translated by a crowd worker.

Step 3: Use the translation data to create quality control HITs where workers rate the translations.

Step 4: Use the CrowdFlower output and run the aggregated.py script to output the best translations per image as a txt file.

Step 5: Run the txt file through our book.py script to output books as txt files.

Between steps 4 and 5 we ran our analysis scripts and used some command line tools to get our analysis data.

Who are the members of your crowd? CrowdFlower workers and students.

How many unique participants did you have? 700

For your final project, did you simulate the crowd or run a real experiment? Real crowd

If the crowd was real, how did you recruit participants? CrowdFlower

Would your project benefit if you could get contributions from thousands of people? true

Do your crowd workers need specialized skills? true

What sort of skills do they need? They need to be able to translate from Spanish to English or French to English.

Do the skills of individual workers vary widely? true

If skills vary widely, what factors cause one person to be better than another?
One person may be better than another for one of two reasons: either they are better versed in the language they are translating from (in our case Spanish or French), or they are better in English than another worker while having similar skills in the other language. In some cases people may be better at both languages.

Did you analyze the skills of the crowd? true

If you analyzed skills, what analysis did you perform? We analyzed their skills using the results from the quality control HITs we created. This information was used to rate all crowdworkers involved with our translations. We made several charts for each of the translation HITs and were able to visually compare how skilled our workers were. We were hoping to compare students to crowdworkers, but the differences were marginal at best. It is clear, however, that there were fewer outliers among the students: fewer people posting horrible spammy results, and fewer people providing plenty of quality submissions. These graphs can all be found at: https://github.com/holdenmc/BookSmart/tree/master/docs/skill_ratings_charts

Graph analyzing skills: https://github.com/holdenmc/BookSmart/blob/master/docs/skill_ratings_charts/Screen%20Shot%202016-05-04%20at%209.01.37%20PM.png

Caption: Average ratings for each student (each bar is a student; the height is their rating).

Did you create a user interface for the crowd workers? true

If yes, please give the URL to a screenshot of the crowd-facing user interface. https://github.com/holdenmc/BookSmart/blob/master/docs/Screenshots/example_ui_hit.png https://github.com/holdenmc/BookSmart/blob/master/docs/Screenshots/example_ui_qc_hit.png

Did you perform any analysis comparing different incentives? false

If you compared different incentives, what analysis did you perform?

How do you aggregate the results from the crowd? We aggregated their work ourselves by taking the responses and putting them back into the books.
We have an aggregation script that runs some of the weighted and unweighted quality control measures shown in class earlier in the semester. From there we choose the best translations and compile books using another script.

Did you analyze the aggregated results? true

What analysis did you perform on the aggregated results? We performed analysis on our aggregation methods. We did both weighted and unweighted aggregations based on the quality of both the translator and the rater. Link to folder of images: https://github.com/holdenmc/BookSmart/tree/master/docs/qc_charts

What challenges would scaling to a large crowd introduce? We automated most of our work, so a larger crowd would mostly just add more for us to handle.

Did you perform an analysis about how to scale up your project? false

What analysis did you perform on the scaling up?

How do you ensure the quality of what the crowd provides? We had 3 people translate each page of our book. We created a separate CrowdFlower HIT where 5 people could judge the quality of each response. For each page, we chose the translation with the best quality to put in the book.

Did you analyze the quality of what you got back? true

What analysis did you perform on quality? We had both college students and regular crowdworkers translate for us. We compared the quality of students to the quality of regular crowdworkers. We also compared the quality of French translations to Spanish translations. Finally, we found the average quality of the translations we used, to see if the quality of our books would be acceptable. This was all tightly correlated with our analysis of skills. Along with this, we also found it interesting to graph the quality of translations given by each contributor against their number of contributions. It showed that in some cases there were clearly people spamming us.
Two folders contain all these charts: https://github.com/holdenmc/BookSmart/tree/master/docs/Contribution_vs_Rating_charts https://github.com/holdenmc/BookSmart/tree/master/docs/qc_charts

If it could be automated, say how. If it is difficult or impossible to automate, say why. It is possible to complete this task with varying degrees of automation. First, you would need some sort of machine learner that can recognize text in order to extract the text from the images; the extracted text could then be translated using an online translator like Google Translate. Given that the machine learner may not be very efficient, leaving that part to crowdworkers may be worthwhile, and just using Google Translate on the transcribed text could prove useful.

Did you train a machine learning component? false

What are some limitations of your project? Two pages that share a sentence might not flow into each other well, since the sentence will begin with one translator and end with another. This could be fixed by showing 2 pages and having workers translate from the first start of a sentence on the first page to the first end of a sentence on the second page. We would also need more translations per page to make a viable product.

Did your project have a substantial technical component? Yes, we had to code like crazy.

Describe the largest technical challenge that you faced: Our book scraper was definitely the largest technical challenge, albeit not too difficult. Making a scraper that fit the children’s book website perfectly took a reasonable amount of time. Compiling the books from our QC output was also pretty intense, but nothing a few Python experts couldn’t handle.

How did you overcome this challenge? We coded like crazy. For the scraper I based my code on the previous gun article homework that we did. I also used some skills from a Python class I took to help me figure out beautifulsoup4 and GET requests.
The toughest part was understanding the layout of the different pages and how to get from the main page, with its dynamically changing set of books, to the pages where all of the images were linked.

Diagrams illustrating your technical component: Caption:

Is there anything else you'd like to say about your project? The best description of our entire project is our video script. Here's a link if you'd like to read more without having to watch our video: https://github.com/holdenmc/BookSmart/blob/master/docs/video_script.txt
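The quality control step described for BookSmart (several translations per page, each scored by several raters, with the best-rated translation kept) could look roughly like the sketch below. It is not the team's aggregated.py: the function name, the tuple layout, and the idea of passing one weight per rater are assumptions chosen only to illustrate unweighted versus weighted averaging.

```python
from collections import defaultdict


def best_translations(ratings, rater_weights=None):
    """Choose one translation per page by (optionally weighted) mean score.

    ratings: iterable of (page_id, translation, rater_id, score) tuples.
    rater_weights: optional dict of rater_id -> weight; raters not listed
    get weight 1.0, so omitting the dict gives the unweighted average.
    """
    weights = rater_weights or {}
    # (page_id, translation) -> [weighted score sum, weight sum]
    totals = defaultdict(lambda: [0.0, 0.0])
    for page_id, translation, rater_id, score in ratings:
        w = weights.get(rater_id, 1.0)
        acc = totals[(page_id, translation)]
        acc[0] += w * score
        acc[1] += w

    best = {}  # page_id -> (translation, average score)
    for (page_id, translation), (score_sum, weight_sum) in totals.items():
        if weight_sum == 0:
            continue  # every rater of this translation had zero weight
        average = score_sum / weight_sum
        if page_id not in best or average > best[page_id][1]:
            best[page_id] = (translation, average)
    return {page_id: text for page_id, (text, _) in best.items()}
```

Down-weighting a rater who appears to be spamming can change which translation wins for a page, which is the practical difference between the weighted and unweighted variants.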


Booksy by Sumit Shyamsukha, Jim Tse, Vatsal Jayaswal

Vimeo Password: nets213

Video profile: http://player.vimeo.com/video/165415780

Give a one sentence description of your project. Booksy makes learning social

What type of project is it? A business idea that uses crowdsourcing

What similar projects exist? Discussion forums for MOOCs and Piazza. The trouble with Piazza is that it doesn't preserve questions across iterations of a course. It's also strictly limited to people enrolled in the course (or people who are given access to the Piazza for the class). Booksy does what Piazza does, and more. Discussion forums on MOOCs have been pretty ineffective for the most part: while some people do take advantage of them, a large share of MOOC users do not.

The part of Booksy that uses the crowd extensively is the annotation and the quality control module. The crowd can upvote or downvote both users and comments, allowing us to use the crowd's judgements as a means of determining the quality of content on Booksy. The aggregation is done automatically, using the lower bound on the Wilson score as a metric. In the future, we plan on adding NLP and ML components that can make more use of the data generated by the crowd on Booksy to improve the QC / aggregation modules of Booksy.

Who are the members of your crowd? The members of the crowd are hypothetically anybody trying to read a particular textbook that is on Booksy. It could be people in a particular class at a university or high school, or people reading a particular document for leisure, work, or as required reading.

How many unique participants did you have? 173

For your final project, did you simulate the crowd or run a real experiment? Both simulated and real

If the crowd was real, how did you recruit participants? We used CrowdFlower to recruit participants from the real crowd.

Would your project benefit if you could get contributions from thousands of people? true

Do your crowd workers need specialized skills? false

What sort of skills do they need? The crowd workers need to be able to read and write in order to use Booksy.
Yes, to make meaningful contributions, they may need specialized skills. However, the mere process of using Booksy can be done without any specialized skills -- anybody who is literate can use Booksy. Do the skills of individual workers vary widely? true If skills vary widely, what factors cause one person to be better than another? Yes -- some people have more education, more experience, or more knowledge compared to other people. These people would be better at contributing to Booksy, since they would be able to clarify other people's doubts quickly, as well as provide effective judgements about the quality of other people's contributions to Booksy. Did you analyze the skills of the crowd? true If you analyzed skills, what analysis did you perform? We first allowed Penn students to use Booksy, and allowed them to comment and rate other users. We then unleashed the real crowd on Booksy, and allowed the crowd workers to rate Penn students and the contributions of Penn students. Through this, we hoped to determine whether there was any correlation between the ratings of Penn students and the ratings of crowd workers. We would expect Penn students' judgements to be more reliable, as the Penn students are a self-selected sample. Our results show that the crowd workers' ratings were, for the most part, not in line with those of Penn students. There could be several reasons for this, the primary reason being that Penn students are a self-selected sample of people with a high degree and quality of education. These assumptions cannot be made of the crowd workers. In this case, we would consider Penn students as the experts. Graph analyzing skills: https://github.com/sumitshyamsukha/nets213-final-project/blob/94c78fc1b4dd99c32bcaa6626f9ce81ae5443304/src/final_analysis/figure_1.png Caption: Correlation between CrowdFlower Worker ratings and Penn student ratings Did you create a user interface for the crowd workers?
true If yes, please give the URL to a screenshot of the crowd-facing user interface. https://github.com/sumitshyamsukha/nets213-final-project/commit/7bfc6f5518142f0756b661137570afda78a6e7d5 Describe your crowd-facing user interface. Upvote / Downvote Comment HIT a. they can help other people learn things better. b. they can solidify their understanding of something and also have their doubts cleared c. they can gain a reputation among their contemporaries (as well as people from all over the world) about their expertise on a particular topic d. they could just enjoy having multiple opinions and viewpoints on a particular piece of text they were reading. The way we incentivized the crowd was interesting -- the Penn students using Booksy were doing so because their participation grade depended on it, whereas the crowd was doing so because they were getting paid to do so. While it could also be a viable idea to pay people (experts) to contribute to Booksy in order to increase the overall value of the content, a better idea would be to appeal to their good side without effectively making it a second job for them. The same incentives can be applied to the real crowd -- the real crowd benefits from the site as much as everybody else, and the larger the number of people, the higher likelihood that the quality of content will be higher. How do you aggregate the results from the crowd? We aggregate the results from the crowd by using the lower bound of Wilson score confidence interval for a Bernoulli parameter to determine a confidence score for each comment / user. We then display the crowd's ratings in order from best to worst. An extension in the future would be to truncate the number of results displayed. Did you analyze the aggregated results? true What analysis did you perform on the aggregated results? We created a word cloud of the crowd workers opinions about the Penn student comments. 
We did this to try and determine what the general sentiment is about the comments (whether it is binary - easy to filter useful comments from useless ones - or not). Graph analyzing aggregated results: https://github.com/sumitshyamsukha/nets213-final-project/blob/4ff76925603dae4e5f72187fe9a6cf3507a7cfcc/src/final_analysis/booksy_cloud.png Caption: Word Cloud of CrowdFlower Worker's Opinions on Comments Did you create a user interface for the end users to see the aggregated results? true If yes, please give the URL to a screenshot of the user interface for the end user. https://github.com/sumitshyamsukha/nets213-final-project/blob/94c78fc1b4dd99c32bcaa6626f9ce81ae5443304/src/final_analysis/UI.png Describe what your end user sees in this interface. The user sees the actual document, the annotated part of the document, the comment made by another user, and the replies for that particular comment. What challenges would scaling to a large crowd introduce? Scaling to a large crowd would introduce the challenge of being able to remove redundant / duplicate comments, and aggregate comments more effectively. If we had near a million comments on a particular part of the text, we should be able to determine what the most relevant comments are for a particular user. Another challenge would be to search through all the comments associated with a particular part of the text, allowing the user to determine if the doubt they are having has come up before. Did you perform an analysis about how to scale up your project? false What analysis did you perform on the scaling up? How do you ensure the quality of what the crowd provides? We use the crowd to do most of the QC for us. Booksy's QC module essentially employs The Wisdom of the Crowd. We allow each user to up-vote and down-vote other users, as well as other user's comments. With a substantial amount of data, we have substantial judgements on a particular user as well as a particular comment. 
The way we ensure the quality of what the crowd provides is by computing for each comment / user a confidence score, calculated using certain statistical principles. We knew that simply subtracting the number of downvotes from the number of upvotes was ineffective and were looking for a sophisticated measure. We ended up using the metric described by Evan Miller in the following article: http://www.evanmiller.org/how-not-to-sort-by-average-rating.html We also intentionally made both Penn students and crowd workers use our product, in order to ensure a balance of users and avoid selection bias, in order to introduce at least some sub-par quality content on Booksy, in order to verify that the crowd would make sure to get rid of content that was not useful. These were some of the measures we used to ensure the quality of the data provided by the crowd. a. using the crowd's results to determine a confidence score b. using the assumption that longer comments would tend to be more insightful, and thus have a higher level of quality. We used a scatter plot to plot the length of each comment and the quality of each comment. We wanted to determine if our hypothesis had any backing, and if not, how significant was the disagreement of the results with our hypothesis? In other words, what other assumptions could we have made about good comments, in order to be able to filter comments in a more structured and effective manner, without the continuous need for other users to filter out bad content. If it could be automated, say how. If it is difficult or impossible to automate, say why. While we could use information extraction coupled with machine learning to automate this process, I believe it is extremely difficult for a machine to analyze a piece of text and come up with critical questions the way a member of the crowd would. This essentially relies on the human tendency to often be confused and not have a perfect understanding of things, as a machine would. 
Did you train a machine learning component? false One of the positive outcomes of our project was that a lot of people who used it were able to actually have a better and more efficient reading and learning experience. This is a clear indication of the potential that Booksy has to disrupt the educational technology industry. Another positive outcome of the project is the potential it has in terms of generating data. This could be one of the most important things about the project -- it acts as a source of natural language data for questions, comments, discussions, and interactions between tons of people. We could use this data in all sorts of ways with machine learning and natural language processing.
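The confidence score described above, the lower bound of the Wilson score confidence interval for a Bernoulli parameter, can be sketched as follows. This is a minimal illustration rather than Booksy's actual code: the function names, the 95% confidence level (z = 1.96), and the `(upvotes, downvotes)` input format are all assumptions.

```python
import math


def wilson_lower_bound(upvotes: int, downvotes: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for the upvote fraction.

    z = 1.96 corresponds to a 95% confidence level (an assumed default).
    """
    n = upvotes + downvotes
    if n == 0:
        return 0.0  # no votes yet: no evidence of quality
    p_hat = upvotes / n
    z2 = z * z
    centre = p_hat + z2 / (2 * n)
    margin = z * math.sqrt((p_hat * (1 - p_hat) + z2 / (4 * n)) / n)
    return (centre - margin) / (1 + z2 / n)


def rank_by_confidence(votes: dict) -> list:
    """Order comment (or user) ids from best to worst.

    votes maps a hypothetical id to an (upvotes, downvotes) pair.
    """
    return sorted(votes, key=lambda k: wilson_lower_bound(*votes[k]), reverse=True)
```

With this score, an item with a single upvote ranks below an item with ten upvotes and no downvotes, because one vote is weak evidence. That is the property a plain upvotes-minus-downvotes or average score does not capture well.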


CCB - Crowdsourced Comparative Branding by

Alex Sands

, Gagan Gupta

, Michelle Nie

, Hemanth Chittela

Video profile: http://player.vimeo.com/video/165398278

Give a one sentence description of your project. CCB is a tool for designers to receive feedback on their designs. What type of project is it? A business idea that uses crowdsourcing What similar projects exist? http://www.feedbackarmy.com/ https://ethn.io/ These projects do not focus on design only. They focus on usability and user research - something this project could turn into. 2. Our code automatically creates a task on CrowdFlower for workers to vote on which version they like, and provide comments on each version of the design. 3. Once the above task is completed, our code then automatically creates another CrowdFlower task to serve as one step of quality control. Workers are given sets of comments alongside the corresponding design and asked to rank how useful the comment is on a scale from 1-5. 4. Once this task is completed, our code automatically aggregates results from both tasks, figuring out which design has the most votes, and then giving the top 5 comments for each design based on the ranking in the second task. These results are then displayed on the original website for designers to log in and see. Who are the members of your crowd? Both designers and workers on CrowdFlower. How many unique participants did you have? 104 For your final project, did you simulate the crowd or run a real experiment? Both simulated and real If the crowd was real, how did you recruit participants? Workers on CrowdFlower were recruited through a monetary incentive, being paid to complete each task. Would your project benefit if you could get contributions from thousands of people? true Do your crowd workers need specialized skills? true What sort of skills do they need? The designers ideally have specialized skills in Photoshop or some design-editing software, though this is not necessary. The CrowdFlower workers need no specialized skills. They simply have to look at the designs and either provide a comment or rank the usefulness of a comment.
https://github.com/hchittela/nets213-project/blob/master/docs/crowdflower_task2.png In the second screenshot, you can see the design of our comment quality control task. For each photo, we show one of the comments that was made on it, and ask crowd workers to select (on a scale from 1 to 5) how helpful the comment is. In the dropdown menu, we also provide examples of what high and low quality comments look like for the crowd workers to reference. CrowdFlower Workers: Workers were incentivized through monetary incentives. They received money for completing the tasks. How do you aggregate the results from the crowd? Our code creates a CrowdFlower task for every 10 pairs of designs that are submitted. We take the results from the first task (voting on which design is better and commenting on both designs) and 1) count which design got the most votes and 2) feed the comments into the second task of rating the usefulness of the comments. We take the results from the second task and take the average of all the ratings. We then pick the top 5 comments with the highest averages. The top choice and the 5 comments are then presented back to the designer on our original website. Through the specific vote count, we were able to validate our conjecture that there is rarely a design that wins over most of the crowd. Rather, it’s often a split close to 60-40 or 70-30. Second, in the majority vote analysis on the second task, which runs on the aggregate data from the first task, we observed a similar trend: most workers had a quality score around 0.6, with a few outliers in either direction.
Landing Page: https://github.com/hchittela/nets213-project/blob/master/docs/landing_page.png Responses Page: https://github.com/hchittela/nets213-project/blob/master/docs/responses_page.png Response Page: https://github.com/hchittela/nets213-project/blob/master/docs/response_page.png Upload Page: https://github.com/hchittela/nets213-project/blob/master/docs/upload_page.png Responses Page: Once the user logs in, they are taken to the Responses page, which displays all designs that the user has submitted. Clicking on one of the designs leads to the response page for that design. Grayed-out designs represent submitted designs that have not yet received the desired number of responses from the crowd and cannot be clicked on. If the user is new and/or has not submitted a response yet, the Responses page states as such and gives a link to the Upload page where the user can upload a design. Response Page: As stated previously, the Response page displays both design options with the option that was considered better underlined by a yellow line. The number and percentage of votes for each option are displayed as well. Lying below this information, the Comments section displays the top 5 most useful comments for each option. Upload Page: In the Upload page, the user can submit a design by inputting various parameters, which are the design job name, the two public URLs for the design options, a description of the design job, and the desired number of responses from the crowd. What challenges would scaling to a large crowd introduce? If it were to get large enough, we would need to adapt our web app to handle the users and would probably require better quality control since we’ll be using such a large number of workers, thus needing to draw from all over the world instead of just US/UK workers. Did you perform an analysis about how to scale up your project? true What analysis did you perform on the scaling up? 
We ran a comparison on the prices of different platforms to host our web app, depending on the performance we’d need based on different number of users. We compared different options offered by Digital Ocean, a competing platform similar to Heroku, based on the estimated number of users we would expect on our site. We noted that there isn’t much of an aspect of scaling up the CrowdFlower worker crowd in this scenario. Crowdflower can handle our load, the issue would be with our web app. From our analysis we concluded that it can cost us anywhere from $25-$500/month, however it’s unlikely that we would require the extra performance offered. We were surprised to see however that Digital Ocean had a strictly linear rate of cost increase compared to capacity. It seemed very fair to the customer! How do you ensure the quality of what the crowd provides? Regarding the designers: We were not particularly worried about the quality of the designs, as this was not important for our application to work successfully. Regarding the CrowdFlower workers: It was definitely a concern - the comments and feedback coming from the workers go directly back to the designers. We wanted to ensure that these comments were useful to the designer, and thus created the second task of rating comments to provide only the best comments. We included gold standard questions in each of the CrowdFlower tasks (described in more detail in next question), and the second task is solely for quality control (also described in more detail in next question). We had a few methods of quality control. In both CrowdFlower tasks, we included questions with answers that were clearly obvious. For example, in the first task of choosing which design is better and why, we included two identical designs, where one of them had a misspelled word. If the worker did not choose the correctly spelled design, we disregarded all answers and comments by this worker. 
In the second task of rating comments, we included a comment that made no sense (was essentially gibberish). If this comment was not rated poorly, we disregarded all answers by this worker. As mentioned a few times above, the second task of rating comments was a method of quality control in itself; by including this task, we are ensuring that only the best comments make it back to the designer. In addition, we limited the responses to US/UK workers to iterate upon a previous mistake. This drastically increased the quality of our comments just by glancing at them; in our first iteration we let workers from all countries submit, and got a lot of comments that made no sense. The only form of quality control we do on the designs is making sure that the URL linking to the design is well-formed and actually links to an image; if it’s not, then the designer is not allowed to submit that URL. In the first CrowdFlower task, we included a gold standard question. This question included two identical photos where one had a misspelled word, making the correctly spelled design the clearly better image. We disregarded the work of any of the workers who chose the design with the misspelled word. In the second CrowdFlower task, we included another gold standard question. This was essentially a gibberish comment, and if they rated this highly, we disregarded this workers work. All in all, only about 8 of our workers missed the gold standard question. If it could be automated, say how. If it is difficult or impossible to automate, say why. The initial step of getting the designs is not automated. This could potentially be automated by pulling designs from a rival website. This would not make sense, however. We would have no way of providing the comments and preference to the designers, and our business model would be based on money from the designers, who are paying to receive feedback. Did you train a machine learning component? 
false In terms of quality, we believe we gave the designers high quality comments. Each comment we gave back to the designer provided constructive criticism on the design. In addition, we think the jobs were well designed and executed, as only a few workers missed the gold standard questions. The CrowdFlower API is difficult to work with. It is poorly documented and things tend not to work sometimes. Learning how to use this API to create actual jobs was the most difficult part of this process, but in the end we got it working. In addition, we had to figure out some way to know if the tasks were completed, as the CrowdFlower API does not push a notification upon completion (other than the email of course, but we wanted everything to happen automatically). We ended up using a cron job to figure out when the tasks were completed. We did not previously know about cron jobs, so this required a lot of reading and learning until we found the correct solution. The cron job runs every 10 minutes, and uses the CrowdFlower API to get the status of each job, and runs code to aggregate results or set up the new task once each task is completed.
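The aggregation and gold-standard filtering described above (drop workers who miss the gold question, count votes per design, then keep the 5 comments with the highest average usefulness rating) could look roughly like the sketch below. It is an illustration only: the function names, the tuple-based input formats, and the `"correct"` gold answer label are assumptions, not the CrowdFlower API or the team's code.

```python
from collections import Counter, defaultdict
from statistics import mean


def trusted_workers(gold_answers: dict, expected: str) -> set:
    """Ids of workers whose answer to the gold-standard question was the expected one."""
    return {worker for worker, answer in gold_answers.items() if answer == expected}


def winning_design(votes: list, trusted: set) -> tuple:
    """votes is a list of (worker_id, design) pairs from the first task.

    Returns the design with the most trusted votes and its share of those votes.
    """
    counts = Counter(design for worker, design in votes if worker in trusted)
    design, n = counts.most_common(1)[0]
    return design, n / sum(counts.values())


def top_comments(ratings: list, trusted: set, k: int = 5) -> list:
    """ratings is a list of (worker_id, comment, score) triples, score on the 1-5 scale.

    Returns the k comments with the highest average score from trusted workers.
    """
    by_comment = defaultdict(list)
    for worker, comment, score in ratings:
        if worker in trusted:
            by_comment[comment].append(score)
    ranked = sorted(by_comment, key=lambda c: mean(by_comment[c]), reverse=True)
    return ranked[:k]
```

Returning the vote share alongside the winner makes splits such as 60-40 or 70-30 visible to the designer instead of hiding them behind a single "winner" label.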


Crowd Library by

Annie Chou

, Constanza Figuerola

, Chris Conner

Video profile: http://player.vimeo.com/video/165414868

Give a one sentence description of your project. Crowd Library uses crowdsourcing to translate children’s books from the International Children’s Digital Library between English and Spanish. What type of project is it? A business idea that uses crowdsourcing What similar projects exist? The Rosetta Project is a particularly well-known project which has been ongoing since 1996. How does your project work? 1. We crawled the ICDL website to get the URLs of each page for each desired book. 2. Workers transcribed text from the photos at the output URLs. 3. Each page was translated from English to Spanish or Spanish to English by bilingual speakers. Three speakers were asked to translate each section. 4. Bilingual speakers were asked to vote for the best translation for each section. 5. We used a script to compare the translations to Google Translate and got a similarity score for each hit response. 6. The best translations for each section were put together into a single translation for the entire book. We did this by choosing the translation that got the lowest (best) sum of points (1 point for 1st, 2 points for 2nd, 3 points for 3rd). We broke ties by choosing the translation with the lowest similarity to Google Translate. Translations from English to Spanish, or Spanish to English. Votes on the best translations for a given page. Cleaned (for grammar, spelling, punctuation, etc.) versions of the best translations.

Spanish transcription: https://github.com/choua1/crowd-library/blob/master/screenshots/transcribe_hit_span.png English to Spanish translation: https://github.com/choua1/crowd-library/blob/master/screenshots/translate_hit_eng-span.png Spanish to English translation: https://github.com/choua1/crowd-library/blob/master/screenshots/translate_hit_span-eng.png English to Spanish vote: https://github.com/choua1/crowd-library/blob/master/screenshots/rate_hit_eng-span.png Spanish to English vote: https://github.com/choua1/crowd-library/blob/master/screenshots/rate_hit_span-eng.png Spanish transcription: Users were provided with 3 links to pages of children’s books from the ICDL. They were asked if there was text on the page or if the link was broken and then to transcribe the Spanish text from the page. English to Spanish translation: Users were provided with 3 blocks of text in English transcribed from the original book and were asked to translate them to Spanish. Spanish to English translation: Users were provided with 3 blocks of text in Spanish transcribed from the original book and were asked to translate them to English. English to Spanish vote: Users were shown the original English text transcribed from the first hit and then 3 Spanish translations. For each translation, they were asked to rank it 1st, 2nd, or 3rd. Spanish to English vote: Users were shown the original Spanish text transcribed from the first hit and then 3 English translations. For each translation, they were asked to rank it 1st, 2nd, or 3rd. The transcriptions for the Spanish pages gave much better results, and this might be because there was more intrinsic motivation. For example, someone who is learning Spanish might have more incentive to test out their knowledge by finishing our hit. Also, our last couple of jobs that required ranking the translations were relatively simple once you got past the quiz. As a contributor you simply had to rank three translations 1st, 2nd, and 3rd.
This short easy task is an incentive for workers. How do you aggregate the results from the crowd? We created a hit that asked workers to rank the 3 translations for each page from 1st to 3rd. For each vote, a translation got 1 (1st place), 2 (2nd place), or 3 (3rd place) points. We tallied the points for each translation and picked the translation with the lowest number of points as the best translation that would go into the final compiled book. In the case of a tie, we checked each translation’s similarity to Google Translate and chose the translation with a lower similarity, under the assumption that the more similar a translation was to Google Translate, the more likely it was that the worker had used machine translation instead of completing the hit themselves. Did you analyze the aggregated results? false What analysis did you perform on the aggregated results? We manually read through the stories to check for overall flow and consistency. Did you create a user interface for the end users to see the aggregated results? false If yes, please give the URL to a screenshot of the user interface for the end user. Describe what your end user sees in this interface. What challenges would scaling to a large crowd introduce? We would run into many of the same quality control issues as we did with our smaller crowd. For instance, there would still be workers who would likely use Google Translate to complete the tasks. The cost would obviously increase, so a larger crowd requires more funding.
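The rank-sum aggregation described above, with ties broken by the lowest similarity to Google Translate, can be sketched in a few lines. The function name and input formats are hypothetical, and the similarity scores are assumed to have been computed beforehand as numbers where higher means closer to the machine translation.

```python
def best_translation(ballots: list, similarity: dict) -> str:
    """Pick the winning translation for one page.

    ballots: one dict per voter mapping translation id -> rank (1, 2 or 3).
    similarity: translation id -> similarity to the machine translation.
    The lowest point total wins; ties go to the lowest similarity.
    """
    totals = {}
    for ballot in ballots:
        for translation, rank in ballot.items():
            totals[translation] = totals.get(translation, 0) + rank
    # Tuples compare element by element, so similarity only matters on a tie.
    return min(totals, key=lambda t: (totals[t], similarity[t]))
```

For example, if two translations both total 3 points, the one with the lower similarity score is returned.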

We analyzed rows completed per hour for transcriptions for the internal vs external crowds. The internal crowd was significantly smaller than the external crowd, and thus took longer to complete the transcriptions. We want to use this analysis to support our assertion that crowdsourcing is a time-efficient method of translating books, compared to the traditional method of a single translator for a book. We investigated the relationship between crowd size and the amount of time it took to complete the transcription task by looking at rows completed per hour. The internal crowd for the English transcriptions did 27 rows per hour while the external crowd for this same hit did 44 rows per hour. The internal crowd for the Spanish transcriptions did 85 rows per hour while the external crowd for the same hit did 34 rows per hour. However, for the Spanish transcription internal job, only one person responded to our hit. This single responder transcribed the data very quickly and would have been an outlier had they been part of a larger crowd. How do you ensure the quality of what the crowd provides? We compare the translations provided by the crowd against Google Translate's translations of the same text. When we pick the best translation for a given text snippet, we first look at the votes; we believe that voters will be the most helpful in picking out the best translations. If there are multiple translations with high numbers of votes, we break the tie by comparing their similarities to Google Translate. The translation that is less similar is chosen, because translations that are too similar to Google Translate were likely not done by the worker alone. To make sure that we have a good translation for each page, we wanted to pick the best translation from the 3 worker translations that we got for each transcription.
We did this by creating a hit that ranked each translation and used this to decide the best translation to use in the final aggregation of each book. We also compared it to Google Translate and broke any ties using this method, given the assumption that Google Translate isn’t as good as human translation. We realized that having a quiz for each job would improve the quality of the results. However, when trying to create a quiz for the transcription job, no one passed the quiz. This was because transcription is very variable and there are many ways to interpret things like white space and punctuation. As a result, we got a lot of angry workers who contested these quiz questions and tried to get them removed. After responding to every person with an apology and removing the quiz questions, we were able to continue with the hit. However, this resulted in poor quality transcriptions, and as a result of this problem we decided to forgo the quiz for the translation hits as well. This was not a problem for the ranking hit: we were able to create many test questions, and we had a quiz that the workers had to pass in order to begin working on this task. About one third of the workers passed the initial quiz and were able to continue to the job. This helped us trust our results more. Looking back over the project, we realized that Google Translate similarity didn’t always capture all the responses where the worker had just copied and pasted the text into Google Translate. We saw that there were many pages where all 3 of the workers responded exactly the same for often very long paragraphs, which is probably the result of using an automated system. However, these results often got low similarity scores to Google Translate. It would have been a better mechanism to check this “cheating” by seeing the similarity between workers on the same page. One way to avoid the issue of people “cheating” and using Google Translate to complete our tasks would be to forgo the transcription hit altogether.
We found that giving workers a text that could easily be copied made it easier to “cheat”. We would have avoided that problem if we had given the translation hit the pictures of the pages instead, which make it much more difficult to use Google Translate and would require almost the same amount of work to transcribe as it would to translate. We analyzed their quality by comparing the similarity scores between the translations and Google Translate along with the ranking scores that we gave them. This will show us if there is a correlation between how well a translation was ranked and how similar it was to Google Translate. We hope that there is a negative correlation between the two aspects to support the idea that crowdsourcing translations is better than using Google Translate. The main question that we were asking is if there is a correlation between the quality of a translation and its similarity to Google Translate’s output. To elaborate, there are a lot of issues with machine translation: because it’s very algorithmic, many times it doesn’t preserve the correct meaning and grammar. Google Translate often translates things very literally and won’t preserve things like sayings or jokes. This issue is particularly important with stories, as it is essential that the story flows smoothly from one page to the next. In this case the stories are for children, and with rough translations from Google Translate, younger kids might not fully understand the result, even when the original words are easy to understand. Also, given that these books have fewer sentences overall, each sentence matters more. This means that if Google Translate messes up a particular sentence, it will have a bigger impact on the overall flow of the book, whereas a bigger book wouldn’t have this problem since someone could figure out the context from the abundance of other translated sentences.
On the other hand, workers who are reading a story can understand the flow of the story and as a result provide a translation that can properly portray the essence of the story and make it easily understandable to children. We wanted to see how the similarity scores and the rankings matched up. The graph showed us that the best translations, the ones with the lowest ranks, didn’t have a particular correlation to the similarity scores. This can be attributed to the fact that when someone does a real translation, sometimes that translation might be the same as Google Translate and sometimes it might use completely different words to convey the same meaning. On the other side of the spectrum, we found that the lowest scoring translations weren’t really getting high percentage similarity against Google Translate. Instead they were getting very low similarity scores, which often meant that they had barely made an attempt at a good translation. This often meant just writing a single letter and calling it a day, or just copying the original text and pretending that it was translated. The conclusion that we reached from this was that, rather than low similarity scores correlating with good quality, having no correlation at all meant higher quality. If it could be automated, say how. If it is difficult or impossible to automate, say why. Translations can be automated, but we believe that, given the current state of machine translation, humans are still better at preserving meaning and clarity when translating. Did you train a machine learning component? false What are some limitations of your project? We assumed that a high similarity to Google Translate would indicate a lower quality translation, but because we were translating children’s books, the simpler vocabulary and grammar may have been a confounding variable we had not previously considered.
In the case of children’s books, Google Translate may not do as poor a job as it would otherwise because the sentences are more straightforward and easier to translate using machine translation. If this were the case, we may not necessarily have picked the best translation when aggregating our translations. Did your project have a substantial technical component? No, we were more focused on the analysis. Describe the largest technical challenge that you faced: Aggregating the pages of children’s books as well as getting the similarities of our translations compared to Google Translate. How did you overcome this challenge? We wrote a web scraper for the first time. We used things like XPath to navigate HTML pages and get the sections that we needed to get the URLs for the pictures. We also used a language processing library to get the similarities between two text files. We also tried to write a script to get the Google Translate output, but Google’s API ended up being more of a hassle, and we found an easier way to achieve the same goal using Google Sheets, which has a built-in function to translate cells into whatever language you want. Diagrams illustrating your technical component: Caption: Is there anything else you'd like to say about your project?
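The alternative check suggested above, comparing workers' translations of the same page with each other rather than with Google Translate, might be sketched as follows. This is not the language processing library the team used: it swaps in Python's standard `difflib`, and the function name, the 0.95 threshold, and the 80-character minimum length are all assumed values that would need tuning.

```python
from difflib import SequenceMatcher
from itertools import combinations


def suspicious_pairs(translations: dict, threshold: float = 0.95,
                     min_length: int = 80) -> list:
    """Flag pairs of workers whose translations of one page are nearly identical.

    translations maps a hypothetical worker id to that worker's text.
    Short texts are skipped because honest translations of a short
    sentence can legitimately match word for word.
    """
    flagged = []
    for (w1, t1), (w2, t2) in combinations(sorted(translations.items()), 2):
        if min(len(t1), len(t2)) < min_length:
            continue
        a, b = t1.strip().lower(), t2.strip().lower()
        # autojunk=False keeps the ratio reliable for long texts.
        ratio = SequenceMatcher(None, a, b, autojunk=False).ratio()
        if ratio >= threshold:
            flagged.append((w1, w2))
    return flagged
```

Flagged pairs would still need a manual look, since for very simple children's-book sentences independent translators may genuinely agree.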


CrowdGuru by

Junting Meng

, Neil Wei

, Raymond Yin

Vimeo Password: nets213

Video profile: http://player.vimeo.com/video/165397039