Final Project

The final term project will be a self-designed project created by the students in consultation with the professor and the TAs. The project will be done in 5 parts beginning mid-semester. Each of these will have an associated set of deliverables. Together these parts will be worth 45% of your overall grade. The project must be done in teams. The minimum team size is 4, and the maximum size is 6. All team members will receive the same grade on the project.

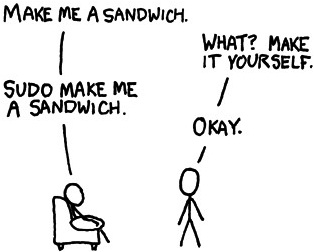

The term project is open-ended. We have some guidelines on what the project must contain – for instance, it must use a crowd, it must have a quality control and an aggregation component, it should include either a machine learning component or a human-computer interaction component, and it must include an analysis – but there is a huge range of what you might do. Check out some of the example final projects below for some models of what constitutes a good project. They show the scope of what you’re required to do, and the range of what is possible.

These example projects range from something that might be a viable business idea that uses the crowd, to a social science analysis of media portrayal of politicians, to a creativity platform for generating children’s books using the crowd.

Here are the different deliverables required for the final project:

- Part 1: Form a team and brainstorm 3 ideas – This is worth 7% of your overall grade.

- Part 2: Select an idea and start implementing its components – This is worth 7% of your overall grade.

- Part 3: Develop a working system that is capable of collecting data – This is worth 7% of your overall grade.

- Part 4: Conduct a preliminary analysis of your data – This is worth 5% of your overall grade.

- Part 5: Analyze your results and produce a video about your project – This is worth 19% of your overall grade.

Example final projects

Here are some examples of my favorite final projects from past classes. You can also check out all of the final project videos from the Spring 2016 class, the Fall 2014 class, and the Fall 2013 class.

Crowdsourcing for Personalized Photo Preferences by Ryan Chen

Video profile: http://player.vimeo.com/video/165376216

Give a one sentence description of your project.
Crowdsourcing for Personalized Photo Preferences solves the problem of gathering personalized photo ratings, that is, ratings that match the tastes of a specific individual.

What type of project is it?
Human computation algorithm

What similar projects exist?
Currently, all Penn social media accounts are managed by one person, Matt Griffin. Matt goes through hundreds of photos each day and selects the top 3 to be posted to the Penn Instagram. Meanwhile, a few universities' social media departments have begun to use the crowd. For instance, Stanford's social media team finds articles that mention Stanford professors in the news and asks the crowd to determine whether each professor was mentioned in a positive or negative light, and whether the social media team should draft a response.

Our inspiration for this project was a research paper that Professor Callison-Burch recommended: A Crowd of Your Own: Crowdsourcing for On-Demand Personalization (2014). In this study, the researchers compare two approaches to crowdsourcing for on-demand personalization: taste-matching and taste-grokking. Taste-grokking primes the crowd before the task by showing workers how the person would like photos rated. Taste-matching is a more laissez-faire approach: it lets workers rate as they wish, then keeps the most similar workers after the fact by computing RMSE scores that measure the similarity between each worker and the individual being taste-matched to. Based on the results of the study, we decided to explore taste-matching.

Steps:
1. For each worker, using their ratings of photos that have also been rated by the person we are taste-matching to, compute the worker's RMSE (root-mean-square error). RMSE measures how far the worker's photo preferences deviate from the true photo preferences of the person being taste-matched to. The RMSE score ranges between 0 and 1: a lower score indicates stronger alignment in taste, and a higher score indicates weaker alignment. At the extremes, RMSE = 0 means the worker agreed on every single photo rating, and RMSE = 1 means the worker disagreed on every photo rating.
2. Compare the photos approved by workers with low RMSEs with the photos approved by workers with high RMSEs.
3. Display the photos side by side to the person being taste-matched to.
4. Count the number of instances in which the photo chosen by the low-RMSE worker was preferred over the photo chosen by the high-RMSE worker.
5. Perform a statistical test.

Who are the members of your crowd?
Workers on Crowdflower, with no constraint on demographics, because we wanted a diverse set of photo preferences.

How many unique participants did you have?
176

For your final project, did you simulate the crowd or run a real experiment?
Real crowd

If the crowd was real, how did you recruit participants?
We paid workers on Crowdflower.
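A minimal Python sketch of the taste-matching filter follows. It assumes each approve/reject rating is coded as 1 or 0, which is consistent with the stated 0-to-1 RMSE range but is not spelled out in the write-up, and it uses the 0.6 cutoff the team reports in its aggregation answer. The function names and the dictionary layout are hypothetical.

```python
import math

# Hypothetical layout: photo ID -> rating, with 1 = approve and 0 = reject (assumed coding).
Ratings = dict[str, int]


def rmse(worker: Ratings, reference: Ratings) -> float:
    """Root-mean-square error over the photos rated by both the worker and the reference person."""
    shared = [photo for photo in worker if photo in reference]
    if not shared:
        raise ValueError("worker rated none of the reference photos")
    squared_errors = sum((worker[p] - reference[p]) ** 2 for p in shared)
    return math.sqrt(squared_errors / len(shared))


def taste_matched_workers(workers: dict[str, Ratings], reference: Ratings,
                          threshold: float = 0.6) -> dict[str, float]:
    """Keep only workers whose RMSE against the reference person is below the threshold."""
    scores = {worker_id: rmse(ratings, reference) for worker_id, ratings in workers.items()}
    return {worker_id: score for worker_id, score in scores.items() if score < threshold}


if __name__ == "__main__":
    reference = {"p1": 1, "p2": 0, "p3": 1, "p4": 1}   # the person being taste-matched to
    workers = {
        "w1": {"p1": 1, "p2": 0, "p3": 1, "p4": 0},   # disagrees on 1 of 4 photos
        "w2": {"p1": 0, "p2": 1, "p3": 1, "p4": 0},   # disagrees on 3 of 4 photos
    }
    print(taste_matched_workers(workers, reference))
```

With 0/1 ratings, RMSE is the square root of the fraction of shared photos on which the worker disagrees, so an RMSE below 0.6 means disagreeing on fewer than 36% of them.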

Did you perform any analysis comparing different incentives?
No

How do you aggregate the results from the crowd?
After calculating each worker's RMSE, we keep the workers whose RMSE scores are less than 0.6; the closer the RMSE is to 0, the more similar the worker's tastes are to the seeded data. Workers whose RMSE scores are greater than 0.6 are discarded, and their preferences are disregarded. By keeping the low-RMSE workers, we can see which photos are approved and rejected, and we can expect these decisions to match those of the seeder (Matt).

Did you analyze the aggregated results?
Yes

What analysis did you perform on the aggregated results?
We performed a population proportion hypothesis test on the aggregated results to see whether RMSE is an accurate way of matching preferences. Chris was shown pairs of photos, each containing one photo approved by a high-RMSE worker and one approved by a low-RMSE worker, and selected one photo from each pair. In 59 of the 100 pairs, Chris chose the photo from the low-RMSE worker. The hypothesis test indicated that 59 was significant enough to conclude that the low-RMSE workers had the better-matched preferences. This also suggests that RMSE is a useful measure of differences in taste.

Did you create a user interface for the end users to see the aggregated results?
No

What challenges would scaling to a large crowd introduce?
A large crowd could skew the results, because it increases the probability that workers from many different segments of the population contribute their preferences. We could potentially miss out on catering to multiple segments of the population.
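The write-up names a population proportion test but not its exact form. As a sketch, assuming a one-proportion z-test of 59 successes in 100 pairs against a no-preference null of p0 = 0.5, plus an exact binomial tail as a cross-check (the function names are hypothetical):

```python
import math


def one_proportion_z_test(successes: int, n: int, p0: float = 0.5) -> tuple[float, float, float]:
    """Return (z, one-sided p, two-sided p) for H0: p = p0, using the normal approximation."""
    p_hat = successes / n
    standard_error = math.sqrt(p0 * (1 - p0) / n)
    z = (p_hat - p0) / standard_error
    # Upper tail of the standard normal: P(Z >= z) = erfc(z / sqrt(2)) / 2
    p_one_sided = 0.5 * math.erfc(z / math.sqrt(2))
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return z, p_one_sided, p_two_sided


def binomial_upper_tail(successes: int, n: int, p0: float = 0.5) -> float:
    """Exact P(X >= successes) for X ~ Binomial(n, p0)."""
    return sum(math.comb(n, k) * p0**k * (1 - p0) ** (n - k) for k in range(successes, n + 1))


if __name__ == "__main__":
    # 59 of 100 pairs went to the low-RMSE worker's photo (figures from the write-up).
    z, p_one, p_two = one_proportion_z_test(59, 100)
    print(f"z = {z:.2f}, one-sided p = {p_one:.3f}, two-sided p = {p_two:.3f}")
    print(f"exact binomial one-sided p = {binomial_upper_tail(59, 100):.3f}")
```

Under these assumptions z = 1.8, giving a one-sided p-value of about 0.036 (about 0.044 from the exact binomial tail). A two-sided test gives about 0.072, so the 59-versus-41 split clears the 5% level only when the alternative is one-sided (low-RMSE photos preferred).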

How do you ensure the quality of what the crowd provides?
We compute an RMSE (root-mean-square error) for each worker, which measures how far the worker's photo preferences deviate from the true photo preferences of the person being taste-matched to. The score ranges between 0 and 1: a lower RMSE indicates stronger alignment in taste, and a higher RMSE indicates weaker alignment. RMSE = 0 means the worker agreed on every photo rating; RMSE = 1 means the worker disagreed on every photo rating.

Did you analyze the quality of what you got back?
Yes

What analysis did you perform on quality?
Is RMSE an accurate way to taste-match? We expected that when shown 2 photos, one approved by a low-RMSE worker and the other approved by a high-RMSE worker, the person being taste-matched to would choose the photo approved by the low-RMSE worker, because that worker's tastes better align with theirs. Our results: Chris rated 100 pairs of photos. In each pair, one photo was approved by a low-RMSE worker and the other by a high-RMSE worker. In 59 of the 100 pairs, Chris preferred the low-RMSE worker's photo; in the other 100 - 59 = 41, the high-RMSE worker's photo was preferred. These were the expected results. The question is whether 59 versus 41 is statistically significant.

What are some limitations of your project?
One source of error is workers who select photos at random to finish the task quickly. This behavior would compromise our results: if such a worker happened to taste-match with the social media manager anyway, their ratings would be counted when they should not be. For instance, a worker who approves every photo without actually looking at it would compromise the results.


Shoptimum by Dhrupad Bhardwaj, Sally Kong, Jing Ran, and Amy Le

Video profile: http://player.vimeo.com/video/114581689

Give a one sentence description of your project.
Shoptimum is a crowdsourced fashion portal for finding links to cheaper alternatives to celebrity clothes and accessories.

What type of project is it?
A tool for crowdsourcing, a business idea that uses crowdsourcing

What similar projects exist?
CopyCatChic: a similar concept that provides cheaper alternatives for interior decoration and furniture. However, it doesn't crowdsource results or showcase items; instead, a team of contributors posts blogs about the items. Polyvore: a crowdsourced platform that curates diverse products into compatible ensembles in terms of decor, accessories, or styling choices. However, it doesn't target the particular goal of finding more cost-effective alternatives to existing fashion trends.

1. Members of the crowd post pictures of models and celebrities, with descriptions of what they're wearing, on a dedicated page.
2. Members of the crowd look at the posted pictures and post links to those specific pieces of clothing on commercial retail sites such as Amazon or Macy's. On the same page, they can post attributes of the clothing, such as color or material, for analytics purposes.
3. The crowd views a posted picture, compares the original piece of clothing to the cheaper alternatives suggested by the crowd, and votes for the strongest match.
4. Each posted picture is shown with the list of items that the crowd has deemed the best matches.

Who are the members of your crowd?
Anyone and everyone interested in fashion!

How many unique participants did you have?
10

For your final project, did you simulate the crowd or run a real experiment?
Simulated crowd

If the crowd was simulated, how did you collect this set of data?
Given that it was finals week, not many people were willing to take the time to find and contribute links and pictures. To add the data we needed, we simulated the crowd with the project members and a few friends who were willing to help out. We each submitted pictures, added links, rated the best alternatives, and so on. Our code aggregated the data and populated the site for us.

If the crowd was simulated, how would you change things to use a real crowd?
The main change would be the incentive program; we focused our efforts on the actual functionality of the application. The idea would be to give people incentives such as points for submitting links that are frequently viewed or alternatives that are highly upvoted. As a viable business model, these points could translate into discounts or coupons with retail websites such as Amazon or Macy's.

Would your project benefit if you could get contributions from thousands of people?
Yes

Do your crowd workers need specialized skills?
No

What sort of skills do they need?
Users don't need any specialized skills to participate. We'd prefer that they have a generally sound sense of fashion and don't upvote clearly dissimilar or unattractive alternatives. A skill that may benefit users is an understanding of materials and types of clothes: users who are good at identifying these would write much more specific search queries for cheaper alternatives, which would likely make the search easier (e.g., searching for "burgundy knit lambswool full-sleeve women's cardigan" versus "maroon sweater women").

Do the skills of individual workers vary widely?
Yes

If skills vary widely, what factors cause one person to be better than another?
Because the app is open to everyone, skills will vary. However, since most people on the app are fashion-savvy or fashion-conscious, we expect most of them to have a reasonably acceptable skill level.
As mentioned, fashion sense and the ability to identify clothing attributes would be a big plus when searching for alternatives.

Did you analyze the skills of the crowd?
No

Did you create a user interface for the crowd workers?
Yes

If yes, please give the URL to a screenshot of the crowd-facing user interface.
https://github.com/jran/Shoptimum/blob/master/ScreenShots.pdf

Describe your crowd-facing user interface.
Each of the 7 screenshots has a caption, numbered starting from the top left and proceeding in reading order:
1. Home screen: what the user sees on reaching the application, including the list of tasks the user can perform on Shoptimum.
2. Submit Links: the user can submit a link to a picture of a celebrity or model whose outfit they want tagged, so that they can emulate the style on a budget.
3. Getting the links: users can submit links to cheap alternatives on e-commerce websites, along with a link to a picture of each item.
4. Users can also submit description tags for the items (e.g., color).
5. Users vote on which alternative is closest to the item in the original celebrity or model picture. Votes are aggregated by simple majority.
6. A page displaying the final ensemble of highest-voted alternatives.
7. A page showing analytics on which product attributes are currently trending.

1. Each user of Shoptimum gets points for contributing to the application, with different actions earning different amounts. For example, submitting images to be tagged and matched with links earns 5 to 10 points, depending on the popularity of the image. Submitting links for an image and tagging it earns between 20 and 100 points, depending on the number of votes the user's submissions cumulatively receive.
If a tag the user submitted ends up in the final highest-rated combination (above a threshold), the user gets a bonus of 100 points for each item. Lastly, voting for the best alternative also earns points, based on how many people agree with you. Because vote counts are not shown, votes are not biased by earlier votes. For example, if you pick the option that most people agree with, you get 30 points; otherwise, you get 15 points for contributing.
2. These points would feed into a system that ranks users by their contributions and how often they use the system. Should the application go live as a business, we would partner with companies such as Macy's, Amazon, and Forever 21 and offer people extra points for listing those companies' items as alternatives rather than items from just any company. Users who collect enough points would be eligible for vouchers or discounts at these stores, which gives them an incentive to participate.

How do you aggregate the results from the crowd?
Aggregation takes place at two steps in the process. First, when people submit links to cheaper alternatives for items shown in a picture, all of these links are collected in a table, each associated with a count of the votes that alternative has received. We keep track of all the alternatives and randomly display 4 of them in the next step, where users pick which alternative most closely matches the original image. A small modification to the script would be to always show the highest-voted alternative, so that if it is indeed the best match, everyone gets to weigh in on it. Next, we aggregate the crowd's votes, incrementing an alternative's count every time someone votes for it. The alternative with the highest count appears on the final results page as the best alternative for the item.

Did you analyze the aggregated results?
Yes

What analysis did you perform on the aggregated results?
For each clothing item we were finding alternatives for, we analyzed which color was generally trending. The idea is simple, but we plan to extend it to material and other attributes, so that we can get an idea of what is in fashion and trending at any given time. This is displayed on a separate tab with pie charts for each clothing item, showing who's wearing what and what the majority of posts say people are looking to wear (the last screenshot shows this). Conclusions are hard to draw given that we had a simulated crowd, but it would be interesting to see what we get if our crowd grew to a large set of people, and across seasons as well.

How do you ensure the quality of what the crowd provides?
Step 3 in our process, the voting, handles QC. We ask the crowd for cheaper fashion alternatives and then have the crowd itself select which alternative is closest to the original. On the voting page, we show the original image side by side with the submitted alternatives, so people can compare in real time which alternative fits best and vote for it. The aggregation step collects these votes and maintains a table of the highest-voted items. By the law of large numbers, as long as each voter is more likely to pick the closest match than any other single alternative, the largest share of votes should go to the closest match as the number of voters grows. This makes voting an effective QC method: an unsatisfactory alternative is unlikely to get votes and thus will not show up in the final results. For now, we keep the process democratic: each user votes once, and that vote counts as one vote. Eventually, if users gain experience and collect enough points by voting for the alternative the crowd ends up selecting, we could modify the algorithm to a weighted voting system that gives their votes more leverage.
However, this does present a risk of abuse of power, and more research would be needed to determine which QC aggregation method is more effective. Regardless, the crowd does the QC for us here. How do we know that they are right? The final results page shows the alternatives that received the most votes from the crowd, and we can see that they are in fact pretty close to what the individual in the original picture is wearing. A dip in usage would be a good indicator that people feel our matches are not accurate, telling us that the QC step has gone wrong. That said, again citing the law of large numbers, that is unlikely, because in aggregate the crowd tends to be right.

1. Picture submissions: We could crawl fashion magazines for pictures of celebrities in their latest outfits to get an idea of fashion trends and have people tag links for those. However, we felt that letting people submit their own pictures was an important piece of the puzzle.
2. Getting links to cheaper alternatives: This would definitely be the hardest part. Instead of links, we would have had to collect specific tags for each item, such as color and material, and use that data to query various fashion e-commerce portals for search results. Then we would probably use a clustering algorithm to try to match each search result with the specific item in the submitted image, and post the results the algorithm deems similar. The crowd would then vote on the best alternatives. Unfortunately, given the variety of styles out there and the complexity of matching images that may be angled or shadowed differently, a large ML component would have to be built in. It would also restrict the sources of products, whereas the crowd is more versatile at finding alternatives from any source reachable via a search engine.
This step is very difficult using ML, but not impossible. One way to make it work might be to monitor usage, build a set of true matches, and then use this labeled image-matching data to train a model better suited to the job in the future. It was much simpler to have the crowd submit results.

In terms of functionality, we managed to get all the core components up and running. We created a clean, seamless interface for the crowd to enter data and vote on it to produce a compiled list of search results. We also set up the structure to analyze more data if needed and to add features based on viability and user demand. The project showed that the crowd was an effective tool for getting the results we needed, and that the concept we were trying to achieve is in fact possible in the form we envisioned. For most of the pictures that were posted, we found good alternatives that were fairly cost-effective, and given how frequently results came from sites such as Macy's, Amazon, and Nordstrom, we could partner with these companies in the future should the application's user base grow large enough.
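Shoptimum's vote aggregation and voting points, as described in the aggregation and incentive answers, can be sketched in Python. This is an illustration under assumptions, not the team's script: votes live in an in-memory `Counter` rather than their table, the class and method names are hypothetical, and "picking the option most people agree with" is interpreted as the chosen alternative being tied for the most votes once the vote is counted.

```python
import random
from collections import Counter


class AlternativeVotes:
    """Tallies crowd votes for cheaper alternatives to one item in a celebrity photo (hypothetical)."""

    BALLOT_SIZE = 4        # the write-up shows 4 random alternatives per ballot
    MAJORITY_POINTS = 30   # the vote matches the most-voted alternative
    OTHER_POINTS = 15      # any other vote still earns contribution points

    def __init__(self) -> None:
        self.votes: Counter[str] = Counter()

    def submit(self, link: str) -> None:
        """Register a submitted alternative with zero votes (no-op if already present)."""
        self.votes.setdefault(link, 0)

    def ballot(self, rng: random.Random, pin_leader: bool = False) -> list[str]:
        """Pick alternatives to show; optionally always include the current leader."""
        links = list(self.votes)
        if len(links) <= self.BALLOT_SIZE:
            return links
        if pin_leader:
            leader = self.leader()
            others = [link for link in links if link != leader]
            return [leader] + rng.sample(others, self.BALLOT_SIZE - 1)
        return rng.sample(links, self.BALLOT_SIZE)

    def vote(self, link: str) -> int:
        """Count one vote (unsubmitted links are added implicitly) and return points earned."""
        self.votes[link] += 1
        top = max(self.votes.values())
        return self.MAJORITY_POINTS if self.votes[link] == top else self.OTHER_POINTS

    def leader(self) -> str:
        """The alternative shown on the results page: the one with the most votes."""
        return self.votes.most_common(1)[0][0]
```

Keeping the counts hidden from voters, as the team describes, is a UI concern; the class only decides what to show and how to score each vote.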


Critic Critic by Sean Sheffer, Devesh Dayal, Sierra Yit, and Kate Miller

Video profile: http://player.vimeo.com/video/114452242

Give a one sentence description of your project.
Critic Critic uses crowdsourcing to measure and analyze media bias.

What type of project is it?
Social science experiment with the crowd

What similar projects exist?
Miss Representation is a documentary that discusses how men and women are portrayed differently in politics, but it draws on a lot of anecdotal evidence and does not discuss political parties, age, or race. Satirical comedy shows, notably Jon Stewart's The Daily Show, Last Week Tonight with John Oliver, and The Colbert Report, slice together media coverage from various sources and point out when bias is present.

The crowdworkers were tasked with finding a piece of media coverage (a URL, blog post, or news article) and identifying three adjectives used to describe the candidate. After the crowdworkers generated this content, we analyzed the data using the weights of the descriptors. A word cloud was a visually appealing way to present the data, so we generated a word cloud for each candidate. The next step was to analyze the descriptors for each candidate, looking at which words had the highest weights to confirm or deny biases in how the candidates are represented.

Who are the members of your crowd?
Americans in the United States (for US media coverage)

How many unique participants did you have?
456

For your final project, did you simulate the crowd or run a real experiment?
Real crowd

If the crowd was real, how did you recruit participants?
We limited the target countries to the United States because we wanted to measure American media coverage. Workers had to speak English, and since we wanted a variety of sources, we limited responses to 5 judgments and set a limit of 5 per IP address. Anyone who could identify an article covering a candidate and had the literacy to identify adjectives could be part of the crowd.

Would your project benefit if you could get contributions from thousands of people?
Yes

Do your crowd workers need specialized skills?
Yes

What sort of skills do they need?
They need to speak English and know enough English syntax to identify adjectives.

Do the skills of individual workers vary widely?
Yes

If skills vary widely, what factors cause one person to be better than another?
Workers need to distinguish adjectives that describe the candidate from words that merely describe something else. For example, for Marco Rubio seeking support from the Latino vote, the word "Latino" describes the vote rather than Rubio, so it is not evidence of bias in how he is described.

Did you analyze the skills of the crowd?
Yes

If you analyzed skills, what analysis did you perform?
We opened the links to the workers' articles from the CSVs and checked whether the adjectives they produced appeared in the articles. We also looked at the rate of judgments per hour and checked whether any responses were rejected for taking less than 10 seconds (i.e., the crowdworker was not actually looking for adjectives used in the article). We also checked the CSVs for users who repeated adjectives (trying to game the system) and opened the links to see whether they were broken. We concluded that paying the workers more increased the number of judgments per hour, as well as the ratings for satisfaction and ease of the job. As for adjectives, because the task was simple, very few responses repeated adjectives, even though workers could have. Responses containing illegible strings were removed from the word clouds.

Did you create a user interface for the crowd workers?
Yes

If yes, please give the URL to a screenshot of the crowd-facing user interface.
https://github.com/kate16/criticcritic/blob/master/actualHITscreenshot.jpg

Describe your crowd-facing user interface.
It asks for the URL and provides an example article that the group decided was adequate for the user to look at. Our interface also shows workers three adjectives taken from the example article, so there is no confusion about what the work involves. Finally, there are three fields for adjectives 1, 2, and 3 and a field for the URL.

We looked at the user ratings for satisfaction and ease of the job across the 5 different HITs (one job for each candidate). At 3 cents per job, satisfaction ranged from 3.3 to 3.5/5, ease was 3.3/5, and we received 1 response a day. We then raised the pay to 10 cents per job, after which satisfaction rose to 4.2/5, ease rose to 4/5, and the rate of responses increased from 1 a day to an average of 5 a day for the 5 launched jobs.

How do you aggregate the results from the crowd?
We had a large list of adjectives, generated from the CSVs, for all the candidates, and we input these word fields to generate 5 word clouds, in which each word's size is scaled by how often it was repeated.

Did you analyze the aggregated results?
Yes

What analysis did you perform on the aggregated results?
We analyzed the descriptors to find recurring themes in the word associations. We also weeded out duplicate adjectives aggregated across all the forms of media.

Did you create a user interface for the end users to see the aggregated results?
No

If it would benefit from a huge crowd, how would it benefit?
A huge crowd would create a large sample of overall media coverage: workers could pull in YouTube videos, blogs, news articles from the New York Times and Fox, and Twitter handles and feeds. With a larger crowd, we get a larger pull and a better representation of the broad spectrum that is media. And with more adjectives and language generated, we can weigh the words used to see whether the candidates are indeed portrayed differently.

What challenges would scaling to a large crowd introduce?
There would be duplicated sources and URLs (which could be deduplicated as in one of the homeworks), but it would be very difficult to ensure that the URLs are not broken, that they point to actual articles, and that the adjectives actually appear in those articles. A media representation can be any URL or link to an article, so verifying this could again be crowdsourced by asking: is this URL a representation of a politician, and do the given adjectives actually appear in the article?

Did you perform an analysis about how to scale up your project?
No

What analysis did you perform on the scaling up?
How much would it cost to pull and analyze 10,000 articles? At 10 cents per article, that would be $1,000 per candidate. Expanding this to just 10 politicians would cost $10,000, and a wide spectrum of, say, 100 candidates for fuller demographics would cost $100,000! This is a very expensive task, so scaling up would need to be automated.

How do you ensure the quality of what the crowd provides?
We knew from previous HIT assignments that tasks would be completed very fast if QC wasn't taken into account. Non-results (fields left blank or filled with whitespace or periods) usually came from workers in Latin America or India. Therefore, we made each question required, and we put a URL validator on the URL field (empty fields were rejected).
For the adjectives, we limited the fields to letters only. We also limited workers to the US.

Did you analyze the quality of what you got back?
Yes

What analysis did you perform on quality?
We looked at the IP locations of the users who answered to check whether they were actually in US cities, and plotted the distribution to confirm that they were indeed in the US. We opened the links from the CSV files to check that they were actual articles and not broken. We also checked the CSVs to make sure the entries were indeed adjectives, and looked for consecutive repeats from the same user (which we did not include in the word cloud). We determined that, because of the limitation to the US, the judgments came in more slowly, but the websites were indeed articles and blogs actually about the candidates, and the adjectives were present. Although at first we were skeptical that the crowd would produce reliable results, the strict QC we implemented this time gave us genuine user data we could use for our project.

Is this something that could be automated?
Yes

If it could be automated, say how. If it is difficult or impossible to automate, say why.
It is difficult to automate. At first we used crawlers to generate the links, but they produced a lot of broken links, and we wanted an adequate sample from all sources of media (the entire body of media coverage) rather than, say, the New York Times opinion section. Also, to automate the selection of adjectives, we would need a program with the entire list of English adjectives that would run through the text and extract the matching words.

Word associations for Obama: Muslim, communist, monarch. Word associations for Hillary Clinton: smart, inauthentic, lesbian. John Boehner's word cloud did not contain any words pertaining to his emotional overtures (unlike the Democratic candidates).
Sonia Sotomayor and Rubio's cloud had positive word connotations in their representation. Overarching trends: Republicans more likely to be viewed as a unit, characterized by their positive with high weights being conservative. Democrats more characterized by strong emotions - passionate, angry. Per gender: men appeared to be viewed more as straightforward and honest. Women charaterized as calculated and ambitions, perhaps because seeking political power is atypical for the gender.'Cowardly' was more likely to describe men perhaps because of similarly gender pressures. All politicians were described as 'Angry' at some point. We were pleased to find while ageism does exist - it applied to everyone once they reach a certain age and not targeted at certain candidates. https://github.com/kate16/criticcritic
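The scaling estimate above is plain arithmetic: articles per candidate × price per article × number of candidates. A minimal sketch, assuming (as the team's estimate implies) a flat 10 cents per article and ignoring any platform fees; the function name `scaling_cost_cents` is hypothetical. Working in integer cents avoids floating-point rounding in currency totals.

```python
def scaling_cost_cents(articles_per_candidate: int, cents_per_article: int, num_candidates: int) -> int:
    """Total crowd cost in cents, assuming one flat-priced task per article (no fees)."""
    return articles_per_candidate * cents_per_article * num_candidates


if __name__ == "__main__":
    # Reproduce the 1, 10, and 100 candidate scenarios at 10,000 articles each.
    for candidates in (1, 10, 100):
        cents = scaling_cost_cents(10_000, 10, candidates)
        print(f"{candidates:>3} candidate(s): ${cents // 100:,}")
```

The cost grows linearly in every factor, which is why the team concluded that covering 100 candidates would need automation rather than more paid judgments.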
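The CriticCritic quality-control steps (a URL validator, letters-only adjective fields, deduplicating URLs, and leaving a worker's consecutive repeats out of the word cloud) could also be applied offline to the exported results. This is a hypothetical sketch, not the team's code: the row keys `worker_id`, `url`, and `adjective` are assumptions, and it only checks URL format. Confirming that a link is live, or that the adjective really appears in the article, would additionally require fetching the page.

```python
import re
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Letters only, mirroring the adjective field validator described above.
ADJECTIVE_RE = re.compile(r"[A-Za-z]+")


def normalize_url(url: str) -> Optional[str]:
    """Return a canonical http(s) URL, or None if the string is not one."""
    parts = urlsplit(url.strip())
    if parts.scheme.lower() not in ("http", "https") or not parts.netloc:
        return None
    # Lowercase scheme and host, drop the fragment and any trailing slash
    # so trivial variants of the same link collapse to one key.
    path = parts.path.rstrip("/")
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def clean_judgments(rows):
    """Return (unique URLs in first-seen order, adjectives kept for the word cloud)."""
    seen_urls = set()
    unique_urls = []
    adjectives = []
    last_by_worker = {}  # worker_id -> that worker's last accepted adjective
    for row in rows:
        url = normalize_url(row["url"])
        adj = row["adjective"].strip().lower()
        if url is None or not ADJECTIVE_RE.fullmatch(adj):
            continue  # reject malformed URLs and non-letter or empty adjectives
        if url not in seen_urls:
            seen_urls.add(url)
            unique_urls.append(url)
        if last_by_worker.get(row["worker_id"]) == adj:
            continue  # consecutive repeat from the same worker: exclude it
        last_by_worker[row["worker_id"]] = adj
        adjectives.append(adj)
    return unique_urls, adjectives
```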
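The team notes that automating adjective selection would need a complete list of English adjectives matched against the article text. A minimal sketch of that lexicon-lookup approach, assuming a tiny hypothetical `ADJECTIVES` set in place of a real lexicon; the resulting counts are the kind of word weights a word cloud is built from. Plain lookup ignores context (many words can be either adjectives or nouns), so a part-of-speech tagger would be a more robust, swapped-in alternative.

```python
import re
from collections import Counter

# Hypothetical stand-in for a full English adjective lexicon.
ADJECTIVES = frozenset({"angry", "passionate", "honest", "calculated", "ambitious", "smart"})


def extract_adjectives(text: str, lexicon=ADJECTIVES) -> Counter:
    """Count words in text that appear in the adjective lexicon (case-insensitive)."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(word for word in words if word in lexicon)
```

For example, `extract_adjectives("An angry, passionate speech; the angry crowd cheered.")` counts "angry" twice and "passionate" once.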


PictureThis by Ross Mechanic, Fahim Abouelfadl, Francesco Melpignano

Video profile: http://player.vimeo.com/video/115816106

Give a one sentence description of your project. PictureThis uses crowdsourcing to have the crowd write new versions of picture books.

What type of project is it? Social science experiment with the crowd, Creativity tool

What similar projects exist? None.

How does your project work? First, we took books from the International Children's Digital Library and separated the text from the pictures, uploading the pictures so that each had its own unique URL to use in the Crowdflower HITs. We then posted HITs on Crowdflower that included all of the pictures from each book, in order, and asked the workers to write captions for the first 3 pictures, with the rest of the pictures given for reference (showing where the story might be going). We collected 6 new judgments for every book. Next, for quality control, we posted another round of HITs that showed all of the judgments made in the previous round and asked the workers to rate them on a 1-5 scale. We then averaged these ratings for each worker on each book, and the work of the two workers with the highest average caption rating for a given book advanced to the next round. This continued until 2 new versions of each of our 9 books were complete. Finally, we had the crowd vote between the original version of each book and the crowdsourced version.

Who are the members of your crowd? Crowdflower workers

How many unique participants did you have? 100

For your final project, did you simulate the crowd or run a real experiment? Real crowd

If the crowd was real, how did you recruit participants? We used Crowdflower workers, most of whom gave our HIT high ratings.

Would your project benefit if you could get contributions from thousands of people? false

Do your crowd workers need specialized skills? false

What sort of skills do they need? They only need to speak English.

Do the skills of individual workers vary widely? false

If skills vary widely, what factors cause one person to be better than another?

Did you analyze the skills of the crowd? false

If you analyzed skills, what analysis did you perform?

Did you create a user interface for the crowd workers? true

If yes, please give the URL to a screenshot of the crowd-facing user interface. https://github.com/rossmechanic/PictureThis/blob/master/Mockups/screenshots_from_first_HIT/Screen%20Shot%202014-11-13%20at%203.52.19%20PM.png

Describe your crowd-facing user interface. Our crowd-facing interface showed all of the pictures from a given book, as well as an area under 3 of the pictures for the crowd workers to write captions.

Did you perform any analysis comparing different incentives? false

If you compared different incentives, what analysis did you perform?

How do you aggregate the results from the crowd? We aggregated the results manually in Excel: we averaged the ratings each worker received on their captions for each book, selected the captions of the two highest-rated workers, and manually moved their captions into the Excel sheet to upload for the next round. Realistically we could have done this using the Python API, and I spent some time learning it, but because the books had different lengths and the Python API returns data as a list of dictionaries rather than a CSV file, it was simply less time-consuming to do it manually for only 9 books.

Did you analyze the aggregated results? false

What analysis did you perform on the aggregated results? We tested whether the crowd preferred the original books to the new crowdsourced versions.

Did you create a user interface for the end users to see the aggregated results? false

If yes, please give the URL to a screenshot of the user interface for the end user.

Describe what your end user sees in this interface.

If it would benefit from a huge crowd, how would it benefit? I don't think the size of the crowd matters too much; anything in the hundreds would work. But the larger the crowd, the more creativity we would get.

What challenges would scaling to a large crowd introduce? With a larger crowd, we would want to create more versions of each story, because that would increase the probability that the final product is great.

Did you perform an analysis about how to scale up your project? false

What analysis did you perform on the scaling up?

How do you ensure the quality of what the crowd provides? Occasionally (although far more rarely than we expected), the crowd would give irrelevant answers to our questions on Crowdflower, such as when a worker wrote the three parts of their story as "children playing", "children playing", and "children playing". However, using the crowd to rate the captions effectively weeded out the poor-quality work.

Did you analyze the quality of what you got back? true

What analysis did you perform on quality? We compared our final results to the original versions of the books by asking the crowd which was better, and the crowd thought the crowdsourced versions were better 76.67% of the time.

Is this something that could be automated? false

If it could be automated, say how. If it is difficult or impossible to automate, say why. It cannot be automated, although the aggregation parts could be.

What are some limitations of your project? There is certainly the possibility that workers voted on their own material, skewing what was passed through to later rounds. Moreover, voters may have been choosing between the original versions and stories that they had partially written themselves, which would skew the results.

Is there anything else you'd like to say about your project?
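PictureThis aggregated ratings by hand in Excel, noting that the Crowdflower Python API returns results as a list of dictionaries. Once the data is in that shape, averaging each worker's ratings per book and keeping the top two takes only a few lines. A sketch, assuming hypothetical keys `book`, `worker`, and `rating` on each dictionary (a real export's field names will differ); ties are broken by worker id so the output is deterministic.

```python
from collections import defaultdict
from statistics import mean


def top_workers(ratings, k=2):
    """Map each book to the ids of the k workers with the highest average rating."""
    # book -> worker -> list of 1-5 ratings that worker's captions received
    by_book = defaultdict(lambda: defaultdict(list))
    for r in ratings:
        by_book[r["book"]][r["worker"]].append(r["rating"])

    result = {}
    for book, workers in by_book.items():
        averages = {worker: mean(scores) for worker, scores in workers.items()}
        # Highest average first; worker id breaks ties.
        ranked = sorted(averages, key=lambda w: (-averages[w], w))
        result[book] = ranked[:k]
    return result
```

The selected worker ids would then be used to pull those workers' captions into the next round's upload, the step the team did manually.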
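To check whether the crowd's preference for the crowdsourced versions (76.67% of votes) is more than chance, one option is an exact two-sided binomial test against a 50/50 null. The project reports only the percentage, not the number of votes, and the p-value depends heavily on that count, so the actual tallies would need to be plugged in. A self-contained sketch using `math.comb` (Python 3.8+); the function name is hypothetical, and the test treats votes as independent, an assumption the limitations above (workers possibly voting on their own stories) call into question. `scipy.stats.binomtest` offers a library implementation of the same kind of test.

```python
from math import comb


def binomial_two_sided_p(k: int, n: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test: probability under H0 (success rate p) of any
    outcome no more likely than observing k successes in n trials."""
    probs = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    observed = probs[k]
    # Small relative tolerance so outcomes equal to the observed one up to
    # floating-point error are counted as "no more likely".
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-7)))
```

For example, `binomial_two_sided_p(10, 10)` is 2/1024 ≈ 0.00195: ten of ten votes for one version would be unlikely under a 50/50 null, while `binomial_two_sided_p(5, 10)` is 1.0.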